Twitter Updated Its AI Chatbot. The Images Are A Dumpster Fire

It’s only been a few days since X (formerly Twitter), the social media site owned by Elon Musk, rolled out the newest iteration of its artificial intelligence chatbot, Grok. Released Aug. 13, the new update, Grok-2, allows users to generate AI images with simple text prompts. The problem? The model has none of the standard safety guardrails other popular AI models have. Simply put, people can do almost anything with Grok. And they are.

Grok is a generative artificial intelligence model, a system that learns on its own and creates new content based on what it’s learned. In the past two years, advancements in data processing and computer science have made AI models incredibly popular in the technology space, with both startups and established companies like Meta developing their own versions of the tool. But for X, that advancement has been marked by concern from users and professionals that the AI bot is taking things too far. In the days since Grok’s update, X has been filled with wild user-generated AI content, with some of the most widely shared images involving political figures.

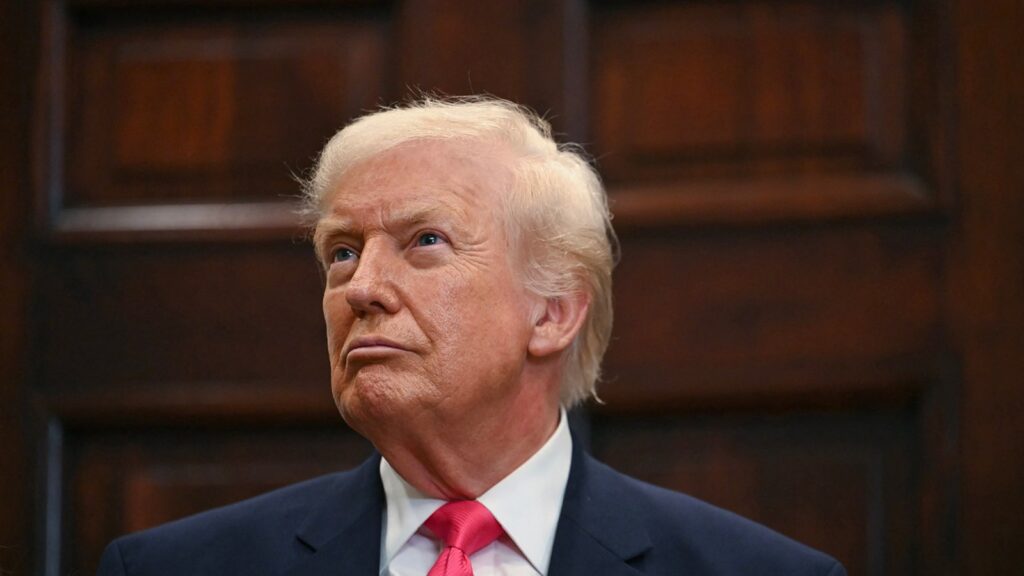

There have been AI images of former President Donald Trump caressing a pregnant Vice President Kamala Harris, Musk with Mickey Mouse holding up an AK-47 surrounded by pools of blood, and countless examples of suggestive and violent content. However, when concerned users on X pointed out the AI bot’s seemingly unchecked abilities, Musk took a blasé approach, calling it the “most fun AI in the world.” Now when users point out political content, Musk simply replies with either “cool” or laughing emojis. In one instance, when an X user posted an AI image of Musk seemingly pregnant with Trump’s child, the X owner responded with more laughing emojis and wrote “Well if I live by the sword, I should die by the sword.”

As researchers continue to develop the field of generative AI, there have been ongoing and increasingly concerned conversations about its ethical implications. During this U.S. presidential election season, experts have also expressed worries about how AI could influence voters or help spread problematic lies to them. Musk specifically has been heavily criticized for sharing manipulated content. In July, the X owner posted a digitally altered clip of Vice President Harris, which used her voice to call President Joe Biden senile and to refer to Harris as “the ultimate diversity hire.” Musk shared the clip with his 194 million X followers without adding a disclaimer that it was manipulated. The post went against X’s stated guidelines prohibiting “synthetic, manipulated or out-of-context media that may deceive or confuse people and lead to harm.”

Though there have been issues with other generative models in the past, some of the most popular, like ChatGPT, have developed far stricter rules about what images they’ll allow users to generate. OpenAI, the company behind the model, does not allow people to generate images by mentioning political figures or celebrities by name. Guidelines also prohibit people from using the AI to develop or use weapons. However, users on X have claimed that Grok will produce images that promote violence and racism, like ISIS flags, politicians wearing Nazi insignia, and even dead bodies.

Nikola Banovic, an associate computer science professor at the University of Michigan, Ann Arbor, tells Rolling Stone that Grok’s problem isn’t the model’s lack of guardrails alone, but its wide accessibility as a bot that can be used with little to no training or tutorials.

“There’s no question that there’s a danger that these kinds of tools are now accessible to a broader public. They can effectively be used for misinformation and disinformation,” he says. “What makes it particularly challenging is that [models] are approaching the ability to generate something really realistic, perhaps even plausible, but that the general public might not have the kind of media or AI literacy to detect as disinformation. We are now approaching the stage where we have to more closely look at some of these images and try to better understand the context so that we as the public can detect when an image is not real.”

Representatives for X did not respond to Rolling Stone’s request for comment. Grok-2 and its mini version are currently in beta on X and are only available to users who pay for X Premium, but the company has announced plans to continue developing the models further.

“This continues to be a broader discussion about what are some of the norms or ethics of creating [and deploying] these kinds of models,” Banovic adds. “But rarely do I hear ‘What is the responsibility of the AI platform owner who now takes this kind of technology and deploys it to the general public?’ And I think that is something that we need to discuss as well.”