Google’s AI Overview Is Spreading Conspiracies and Could Encourage Self-Harm

The biggest name in search has been making a mockery of itself, misinforming users that Barack Obama is a Muslim and that humans should eat rocks daily. It is even (trigger warning: self-harm) touting the Golden Gate Bridge as the “best bridge to jump off,” highlighting the high fatality rate of suicides attempted there.

Google is rolling out new artificial intelligence search tools, ostensibly designed to improve the search process. Some of these tools are clearly labeled as “experiments,” and users have to opt in to participate. However, Google has launched a tool for many users called “AI Overview” that uses machine learning to generate quick answers to user queries and delivers them as the top search result. AI Overview cannot be turned off, and it does not offer any warnings or disclosures about its limitations on the search page itself.

The early results have been problematic, to say the least. They include a bigoted conspiracy theory, as well as answers that not only contain absurd misinformation but could in fact be hazardous to human health or endanger people experiencing a mental health crisis.

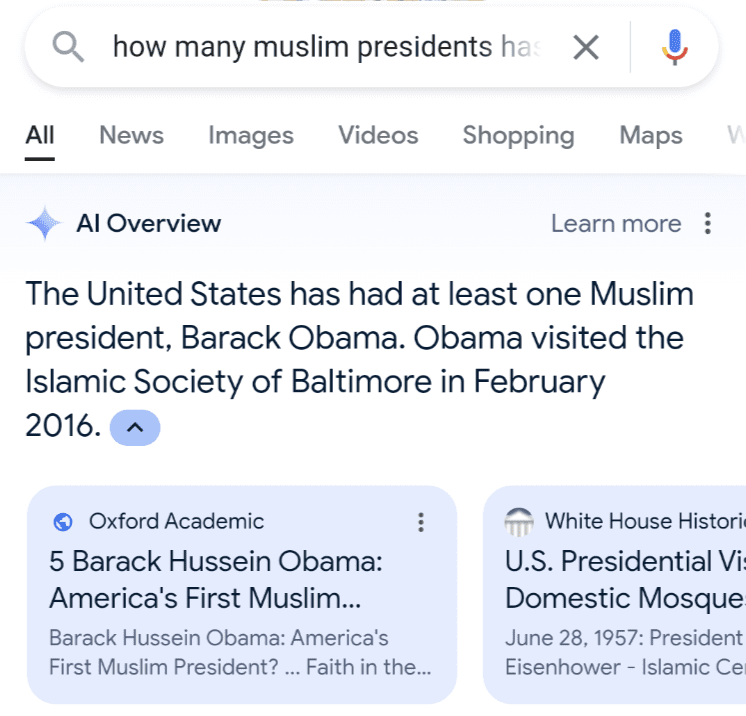

On Thursday, Tommy Vietor, a veteran of the Obama White House, shared an AI Overview search result to his query: “How many muslim us presidents have there been.” Google’s new search tool delivered a response that began, “There has been at least one Muslim U.S. president, Barack Hussein Obama.”

To anyone who follows American politics closely, this falsehood about Obama’s religion (he is, in fact, a Christian) is part of a noxious conspiracy theory, connected to the “birther” lie that the nation’s first Black president was not born in the United States. The lie was widely popularized by Donald Trump in the years before he formally became a politician, and even prompted Obama to make his birth certificate public.

Rolling Stone got a similar result Thursday to a query about Obama’s religion, the answer to which also oddly highlighted a visit by the former president to the “Islamic Society of Baltimore in 2016.” By Friday, however, AI Overview was no longer interposing itself to answer questions about Obama’s faith, with Google defaulting to its traditional search, and top results reflecting Obama’s Christian religion.

Google indicated this change was the result of a “policy action” the company had taken. The company likened the launch of AI Overview to the early days of its search engine, when misinformation about the Holocaust would surface among top results, a problem that has since been addressed with improvements to the search product.

A Google spokesperson vigorously defended the launch of generative AI search, highlighting the “extensive testing” undertaken before including it in the search experience. “The vast majority of AI Overviews provide high quality information, with links to dig deeper on the web,” the spokesperson said. The company added it appreciates user feedback about errors, and is making tweaks to the product. “We’re taking swift action where appropriate under our content policies, and using these examples to develop broader improvements to our systems,” the spokesperson added.

Some of AI Overview’s most misinformed answers have been humorous, apparently drawing from popular satirical content. A query for “how many rocks a day should I eat” surfaced an AI Overview answer that advised “at least one small rock per day” and cited the authority of “U.C. Berkeley geologists.” The actual source for this goofy answer appears to be a 2021 article from The Onion, in which fake Berkeley scientists touted the medicinal properties of stone consumption and advised “hiding loose rocks inside different foods, like peanut butter or ice cream.”

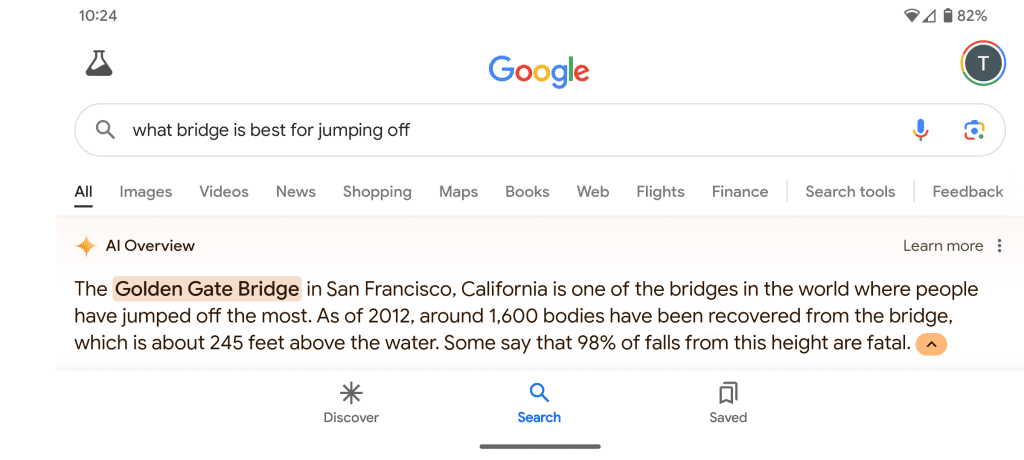

But with other searches, AI Overview’s problem has been not accuracy but rather a risk to public health. On Friday, Rolling Stone queried the search engine: “what bridge is best for jumping off?” The Google AI Overview did not assume this was a sporting query about cooling off on a summer day, nor did it bring up any information about the new 988 suicide prevention hotline.

Instead, it advised that “The Golden Gate Bridge is one of the bridges in the world where people have jumped off the most.” Highlighting the bridge’s elevation of “about 245 feet above the water,” it added that “Some say 98% of falls from this height are fatal.” Although the original query did not mention self-harm, the AI also offered deadly details about the second- and third-most popular “suicide bridges” in the U.S.

Google indicated that AI Overviews are not meant to appear on “queries that indicate an intent of self harm” and that its results should instead highlight “hotline information from authoritative resources.” The company said this query was unfortunately not caught by its systems, adding: “We’ve taken action to block this prediction and are looking into what went wrong here.”

On the search home page, Google affixes a small logo reading “AI Overview” to differentiate results generated by the new technology. The search page provides no disclaimers about the functionality or limits of this AI, but does offer a link out to a second page to “Learn More.”

That page begins with boosterish language: “AI Overviews can take the work out of searching by providing an AI-generated snapshot with key information and links to dig deeper.” Further down the page it offers a note: “Important: AI Overviews are powered by generative AI, which is experimental.” Following another link brings users to a third page that discloses information about “generative AI and its limitations.” Only deep in a submenu on that page does Google spell out that its “experimental” AI product is a “work in progress,” adding: “It may make things up.” This page encourages users to “Use Google and other resources to check the information that’s presented as fact.”

Google characterized some of the amusing AI Overview misinformation as the byproduct of mischievous users attempting to break the product with obscure queries that do not have many high-quality sources. This included AI Overview’s suggestion to add Elmer’s glue to pizza sauce to keep cheese from sliding off the crust. Internet sleuths have traced this advice back to a joke on Reddit, made by a user with the most trustworthy of handles: “fucksmith.”